Foreword by Herbert E. Huppert FRS

Chapter 1: The unconscious brain

Chapter 2: What is lucidity? What is understanding?

Chapter 3: Mindsets, evolution, and language

Chapter 4: Acausality illusions, and the way perception works

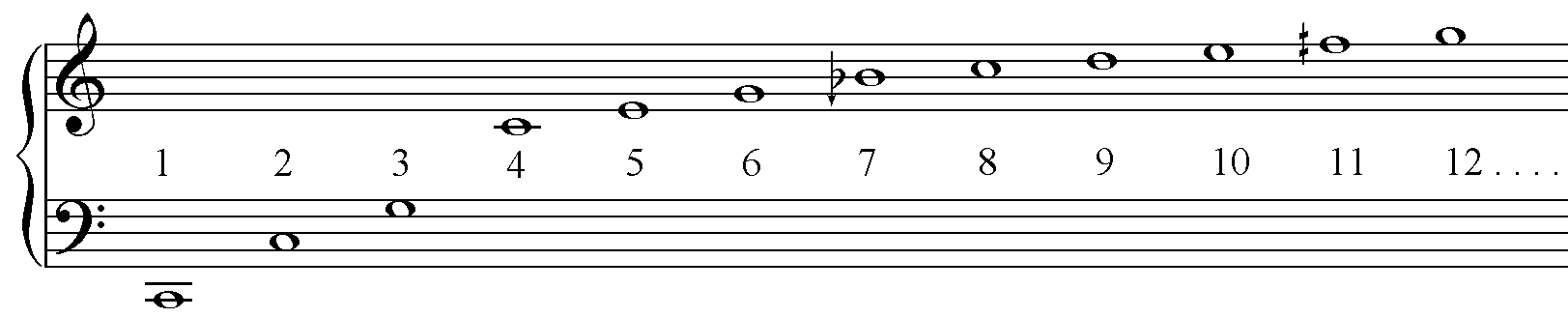

Chapter 6: Music, mathematics, and the Platonic

Postlude: The amplifier metaphor for climate

Michael Edgeworth McIntyre FRS has written a book wide in its intellectual range and provocative in its implications. That will surprise no-one who knows him. His deep yet expansive vision of the world goes back to his ancestry and upbringing. His father, Archie McIntyre FAA, was a respected neurophysiologist and his mother, Anne McIntyre, was an accomplished visual artist. His great-grandfather Sir Edgeworth David FRS was a famous geologist and Antarctic explorer.

Michael’s childhood experiences, in Australia and New Zealand, included observing his father’s experimental skill, dissecting out individual nerve fibres in a laboratory full of electronic amplifiers and oscilloscopes. That was in the early days of micro-electrodes able to record from a single nerve cell. Michael also remembers being fascinated as a small boy by the sound of Beethoven’s Eighth Symphony made visible on an oscilloscope. He became very curious about how that sound — the sound of the “marvellously life-enhancing, energetic first movement”, as he described it — was related, somehow, to the wiggling green line on the oscilloscope tube.

In his teens Michael became a skilled violinist, and in 1960 rose to be leader of the New Zealand National Youth Orchestra. Such was his talent and his love of music that, after coming to Cambridge to work for a doctorate in fluid dynamics, he considered becoming a full-time professional musician — and indeed did become a part-time professional, and a member of the British Musicians’ Union, for some years. However, to our great good fortune, mathematics and science exerted a stronger pull in the end. He continues to play violin and viola and to passionately explore the links of music to other fields, notably neurology.

The book is divided into sections, the anecdotal narratives dancing gracefully with the scholarly enquiries, explicit or implicit, around the opening question “What is science?”

Rarely in this era of super-specialization does one encounter a true polymath, but that is a fair title to bestow on Michael. He has made original contributions to the fundamental understanding of fluid dynamics in the Earth’s atmosphere, in its oceans and in the Sun’s interior. He writes persuasively about the importance of lucidity and “lucidity principles”, whether in writing and speaking, or in designing a nuclear reactor (and everything in between). He is concerned about the unconscious assumptions that impede human progress, not least those stemming from our evolutionarily ancient dualism or “dichotomization instinct”, which “makes us stupid” in the manner now so dangerously amplified by the social media, through their “artificial intelligences that aren’t yet very intelligent”. But we can still hope that “smarter robots could be a game-changer in helping us humans to get smarter too.” Michael argues for the importance of scientific ethics, and reminds us of the limits of our epistemologies.

Michael is an engaging companion on this highly informed but charmingly informal odyssey. He invites the reader to see new landscapes through new lenses, and, crucially, to make new connections in all sorts of ways.

This book brings together a set of interconnected insights from the sciences and the arts that are so deep, and so basic, I think, in so many ways, that they deserve to be better known than they are. They include insights into communication skills, and into the power and limitations of science. They are ‘deep’ in the sense of being deep in our nature, and evolutionarily ancient. I go on to discuss, at the end, what science can and can’t tell us about the climate problem.

So I hope that the book will interest scientifically-minded readers, and especially young scientists. I’ve tried to bring out the connections in a widely understandable way that avoids equations and technical jargon. The book draws on a lifetime’s personal experience as a scientist, as a mathematician, and as a musician.

It hardly needs saying that if you’re a young scientist you need to hone your communication skills. In our complex world such skills are needed not only between scientists and the public, but also between scientists in different specialties. I’ll argue that it’s useful to know, for instance, how the skilful use of written and spoken language can be informed by the way music works — music of any genre.

Good communication will be crucial to tackling today’s and tomorrow’s great problems including the problems of climate change, biodiversity, and future pandemics and, now more urgently than ever, the problem of understanding the strengths and weaknesses of artificial intelligence. One obstacle to understanding is the misconception — it’s sometimes called the ‘singularity fallacy’ — that there’s only one kind of intelligence, and only one measure of intelligence.

To develop our understanding of how communication works it’s useful and interesting to consider the origins of human language. It’s now known that biological evolution gave rise to our language ability in a manner quite different from what popular culture says. And evolution is itself now better understood. Rather than a simple competition between selfish genes it’s more like turbulent fluid flow, in some ways — a complex process spanning a vast range of timescales. I try to discuss those points very carefully, since they’re still contentious.

A further aspect of evolution is that, again contrary to popular belief, there’s a genetic basis not only for the nastiest but also for the most compassionate, most cooperative parts of human nature.

Throughout the book I’ve aimed for high-quality reasoning and respect for evidence. I’ve tried to keep the main text as short as possible, and readable without reference to the voluminous endnotes. I recommend ignoring the endnotes on a first read. However, as well as adding to the arguments, the endnotes give what I hope are enough literature references to support what I say. The literature is moving fast; and this second edition incorporates a number of significant updates. One of them is a truly remarkable new book118 by my climate-science colleague Tim Palmer FRS.

Note regarding video and audio clips: Figure 1 below presents an animated video clip that is basic to many of my arguments. To see the animation, click on the black square in the figure. (The black squares in this and some other figures are placeholders for QR codes in the published version, where they provide appropriate audiovisual links. Those links are duplicated in the published supplementary material, at https://www.worldscientific.com/worldscibooks/10.1142/13429#t=suppl.)

Acknowledgements: Many kind friends and colleagues have helped me with advice, encouragement, information, and critical comments over the years. I have learned much from experts on the latest developments in systems biology, evolutionary theory, and palaeoclimatology. Beyond those mentioned in the acknowledgements sections and endnotes of my original Lucidity and Science papers34, 75, 130 I’d like to thank Dorian Abbot, Leslie Aiello, David Andrews, Paul Ashworth, Grigory Barenblatt, Terry Barker, George Batchelor, Pat Bateson, Liz Bentley, Francis Bretherton, Oliver Bühler, Frances Cairncross, David Crighton, Ian Cross, Judith Curry, Philip Dawid, David Dritschel, Kuniyoshi Ebina, George Ellis, Sue Eltringham, Kerry Emanuel, Matthew England, David Fahey, Georgina Ferry, Rupert Ford, Angela Fritz, Chris Garrett, Jeffrey Ginn, Douglas Gough, Richard Gregory, Stephen Griffiths, Joanna Haigh, Peter Haynes, Isamu Hirota, Brian Hoskins, Matthew Huber, Herbert Huppert, James Jackson, Sue Jackson, Philip Jones, Peter Killworth, Kevin Laland, Steve Lay, James Lighthill, Zheng Lin, Paul Linden, Shyeh Tjing Loi, Malcolm Longair, Jianhua Lü, James Maas, David MacKay, Normand MacLaurin, Niall Mansfield, David Marr, Nick McCave, Evelyn McFadden, Amy McGuire, Richard McIntyre, Ruth McIntyre, Steve Merrick, Gos Micklem, Alison Ming, Simon Mitton, Ali Mohebalhojeh, Ken Moody, Brian Moore, Walter Munk, Alice Oven, Tim Palmer, Antony Pay, Anthony Pearson, Tim Pedley, Sam Pegler, Max Perutz, Ray Pierrehumbert, Miriam Pollak, Vilayanur Ramachandran, Dan Rothman, Murry Salby, Adam Scaife, Nick Shackleton, Ted Shepherd, Adrian Simmons, Bill Simmons, Emily Shuckburgh, Luke Skinner, Appy Sluijs, David Spiegelhalter, Marilyn Strathern, Zoe Strimpel, Daphne Sulston, John Sulston, Stephen Thomson, Paul Valdes, Yixin Wan, Andy Watson, Ronald S. Watts, Estelle Wolfers, Flick Wolfers, Jeremy Wolfers, Jon Wolfers, Peter Wolfers, Eric Wolff, Toby Wood, Jim Woodhouse, and Laure Zanna. 
And I owe two very special debts of gratitude. One is to the clarinettist and conductor Antony Pay, a man of profound insight not only into the workings of music but also into science, humanity, and human nature. At a late stage he kindly read the entire manuscript and made many helpful suggestions. My other special debt is to Alice Oven, without whom this book would never have been written. As a young commissioning editor for World Scientific, it was she who first got me interested in embarking on this project, building on refs. 130. World Scientific’s Swee Cheng Lim, Jing Wen Soh, and Rok Ting Tan have helped in numerous ways with the task of seeing the book through to press.

— MEM

Cambridge, February 2023

Consider for a moment the following questions.

Good answers are important to our hopes of a civilized future; and many of the answers are surprisingly simple. But a quest to find them will soon encounter a conceptual and linguistic minefield, some of it around ideas like ‘innateness’ and ‘instinct’. Still, I think I can put us within reach of some good answers (with a small ‘a’) by recalling, first, some points about how our pre-human ancestors must have evolved — in a way that differs crucially from what popular culture says — and, second, some points about how we perceive and understand the world.

One reason for looking at evolution is the prevalence of misconceptions about it. Chief among them is the idea that natural selection works solely by competition between individuals. That ignores the many examples of cooperative behaviour among social animals, which Charles Darwin himself was at pains to point out.1 Saying that competition between individuals is all that matters flies in the face of this and much other evidence. It has also done great damage to human societies.2

On perception, understanding, and misunderstanding, and on our extraordinary language ability, it hardly needs saying that they were shaped by our ancestors’ evolution. Less obvious, however, is that the evolution must have depended not only on cooperation alongside competition but also, according to the best evidence, on a powerful feedback between genomic evolution and cultural evolution. For instance our language ability couldn’t have been suddenly invented around a hundred millennia ago, purely as a result of cultural evolution, as some researchers have argued. On the contrary, I’ll show in chapter 3 — drawing on clinching evidence from Nicaragua — that our language ability must have developed much more gradually, through the co-evolution of genomes and cultures with each affecting the other over a much longer timespan, probably millions of years.

Such co-evolution is necessarily a multi-timescale process. Multi-timescale processes are ubiquitous in the natural world. They’re found everywhere. They depend on strong feedbacks between different mechanisms over a large range of timescales. Here we have slow and fast mechanisms in the form of genomic evolution and cultural evolution. I’m using the word ‘cultural’ in a broad sense, to include everything passed on by social learning. Such feedbacks have often been neglected in the literature on biological evolution. Their likely importance for pre-human evolution and their multi-timescale aspects were, however, recognized and pointed out as long ago as 1971 by the great biologist Jacques Monod,3 and by the great palaeoanthropologist Phillip Tobias.4

Another theme in this book will be unconscious assumptions. It’s clear that such assumptions underlie, for instance, the polarized debates about ‘nature versus nurture’, ‘instinct versus learning’, ‘genomic evolution versus cultural evolution’, and so on. A gut feeling that evolution is either genomic or cultural, with each excluding the other, is typical. At a deeply unconscious level, it’s assumed that you can’t have both together. Further examples will come up in chapter 3. They’re germane to some notable scientific controversies.

The dichotomization instinct, as I’ll call it, the visceral push toward polarization — toward seeing all choices and distinctions as binary and exclusive — is by no means the only source of unconscious assumptions. Much more of what’s involved in perception and understanding, and in our general functioning, takes place unconsciously. Some people find this hard to accept. Perhaps they feel offended, in a personal way, to be told that the slightest aspect of their existence might, just possibly, not be under full and rigorous conscious control. A brilliant scientist whom I know personally as a colleague took offence in exactly that way, in a discussion we had on unconscious assumptions in science — even though the exposure of such assumptions is the usual way in which scientific knowledge improves, as history shows again and again, and even though I offered clear examples from our shared field of expertise.5

Many other examples are given in the book by Daniel Kahneman.6 My own favourite example is a very simple one, Gunnar Johansson’s ‘walking dots’ or ‘walking lights’ animation. Twelve moving dots in a two-dimensional plane are unconsciously assumed to represent a particular three-dimensional motion. When the dots are moving, everyone with normal vision sees a person walking. To see the animation, click on the black square:

Figure 1: On the left is a single frame from Gunnar Johansson’s ‘walking dots’ animation. The black square on the right is a link to display the animation (and a placeholder for an appropriate QR code in the published book). The animation shows a person walking from far right to near left. The walking dots phenomenon is a well-studied classic in experimental psychology and is one of the most robust perceptual phenomena known. Animation constructed by Steve Lay from data kindly supplied by Professor James Maas.

And again, anyone who has driven cars, or flown aircraft, will probably remember occasions on which accidents were avoided ahead of conscious thought. The typical experience is often described as witnessing oneself taking, for instance, evasive action when faced with a head-on collision, or other life-threatening emergency. It is all over by the time conscious thinking has begun. It has happened to me, in cars and in gliders. I think such experiences are quite common. Kahneman gives an example from firefighting.6

Many years ago, the anthropologist-philosopher Gregory Bateson put the essential point succinctly, in classic evolutionary cost-benefit terms:7

No organism can afford to be conscious of matters with which it could deal at unconscious levels.

Gregory Bateson’s point applies to us as well as to other living organisms. Why? There’s a mathematical reason, combinatorial largeness. Every living organism has to deal all the time with a combinatorial tree, a combinatorially large number, of present and future possibilities. Each branching of possibilities multiplies, rather than adds to, the number of possibilities. Being conscious of all those possibilities would be almost infinitely costly.

Combinatorially large numbers are unimaginably large. No-one can feel their magnitudes intuitively. For instance the number of ways to shuffle a pack of 52 cards is 52 × 51 × 50 × ... × 3 × 2 × 1. That’s just over eighty million trillion trillion trillion trillion trillion.
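For readers who like to check such figures, here is a minimal Python sketch using only the standard library. It computes the number of shuffles exactly and compares it with eighty million trillion trillion trillion trillion trillion, taking a trillion as 10^12. The variable names, and the branching example with 3 options at each of 40 steps, are illustrative assumptions and are not taken from the text.

```python
import math

# Number of distinct orderings of a 52-card pack: 52 x 51 x ... x 2 x 1.
shuffles = math.factorial(52)

# "Eighty million trillion trillion trillion trillion trillion"
# = 80 x 10^6 x (10^12)^5 = 8 x 10^67, with a trillion taken as 10^12.
eighty_million_trillion5 = 80 * 10**6 * (10**12) ** 5

print(f"52! is roughly {shuffles:.4e}")
print("Just over the quoted figure:", shuffles > eighty_million_trillion5)

# Hypothetical branching example (numbers chosen for illustration only):
# 3 options at each of 40 successive branchings multiply to 3**40 paths,
# whereas merely adding them would give only 3 * 40.
options, steps = 3, 40
paths_multiplied = options ** steps
paths_added = options * steps
print("Multiplying:", paths_multiplied, "Adding:", paths_added)
```

The contrast between the last two numbers is the point of the combinatorial tree: each branching multiplies the count of possibilities rather than adding to it.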

The ‘instinctive’ avoidance of head-on collision in a car — the action taken ahead of conscious thought — is not, of course, something that comes exclusively from genetic memory. Learning is involved as well. The same goes for the way we see the walking dots animation. But much of that learning is itself unconscious, stretching back to the infantile groping that discovers the outside world and allows normal vision to develop.8 Far from being mutually exclusive, nature and nurture are intimately intertwined. That intimacy stretches even further back, to the genome within the embryo ‘discovering’ and interacting with its maternal environment.9 Jurassic Park is a great story, but scientifically wrong because you need dinosaur eggs as well as dinosaur DNA. Who knows, though — since birds are dinosaurs someone might manage it, one day, with reconstructed DNA and birds’ eggs.

My approach to questions like the foregoing comes from long experience as a scientist. Science was my main profession for fifty years or so. Although many branches of science interest me, my professional career was focused mainly on mathematical research to understand the highly complex, multi-timescale fluid dynamics of the Earth’s atmosphere and oceans. Included are phenomena such as the great jet streams and the air motion that shapes the ozone hole in the Antarctic stratosphere, and what are sometimes called the “world’s largest breaking waves”. Imagine a giant sideways breaker in the stratosphere the mere tip of which is almost as large as the entire USA. That research has in turn helped us, in an unexpected way, to understand the complex fluid dynamics and magnetic fields of something even more gigantic, the Sun’s interior. But long ago I almost became a musician. Or rather, in my youth I was, in fact, a part-time professional musician and could have made it into a full-time career. So I’ve had artistic preoccupations too, and artistic aspirations. This book tries to get at the deepest connections between all these things.

It’s obvious, isn’t it, that science, mathematics, and the arts are all of them bound up with the way perception works. That’ll be the central topic in chapter 4, where the walking dots will prove informative. And common to science, mathematics, and the arts is the creativity that leads to new understanding, the thrill of curiosity and lateral thinking, and sheer wonder at the whole phenomenon of life itself and at the astonishing Universe we live in.

One of the greatest of those wonders is our own adaptability, our versatility. Who knows, it might even get us through today’s crises, desperate though they might seem. We know that our hunter-gatherer ancestors were highly adaptable. They were driven again and again to migration and different ways of living by, among other things, rapid climate fluctuations — the legendary years of famine and years of plenty. How else did our species — a single, genetically-compatible species with its single human genome — spread around the globe in less than a hundred millennia? Chapter 3 will point to recent hard evidence for the sheer rapidity, and magnitude, of some of those climate fluctuations.

Chapter 3 will also point to recent advances in our understanding of biological evolution and natural selection, advances not yet assimilated into popular culture. One implication is that not only the nastiest but also the most compassionate, most cooperative parts of our makeup are ‘biological’ and deep-seated.2, 10, 11 There’s a popular misconception — yet another variation on the theme of nature ‘versus’ nurture — that our nastiest traits are exclusively biological and our nicest traits exclusively cultural. We’ll see that the evidence says otherwise.

Here, by the way, as in most of this book, I lay no claim to originality. For instance the evidence on past climates comes from the painstaking work of colleagues at the cutting edge of palaeoclimatology, including great scientists such as the late Nick Shackleton whom I had the privilege of knowing personally. And the points I’ll make about biological evolution rely on insights gleaned from colleagues at the cutting edge of biology, including the late John Sulston of human-genome fame, whom I also knew personally.

Our ancestors must have had not only language and lateral thinking — and music, dance, poetry, and storytelling — but also rhetoric, power games, blame games, genocide, ecstatic suicide and the rest. To survive, they must have had love and compassion too. The precise timespans and evolutionary pathways for these things are uncertain. But the timespans for at least some of them, including the beginnings of our language ability, must have been a million years or more to allow for the multi-timescale co-evolution of genomes and cultures.

As already suggested there’s been a tendency to neglect such co-evolution despite the ubiquity — the commonplace occurrence — of other multi-timescale processes in the natural world. Of these there’s a huge variety. To take one of the simplest examples, consider air pressure, as when pumping up a bicycle tyre. Fast molecular collisions mediate slow changes in air pressure, and air temperature, while pressure and temperature react back on collision rates and strengths. That’s a strong and crucial feedback across enormously different timescales.

So it never made sense to me to say that long and short timescales can’t interact. It never made sense to say that genomic evolution can have no interplay with cultural evolution just because the one is slow and the other is fast. And in particular it never made sense to argue from the archaeological record, as some researchers have, that language started around a hundred millennia ago as a purely cultural invention — the sudden invention of a single mother tongue from which today’s languages are all descended, purely by cultural transmission.12, 13 I’ll return to these points in chapter 3 and will try to argue them very carefully.

When considering the archaeological record it’s sometimes forgotten that language and culture can be mediated purely by sound waves and light waves and held in individuals’ memories — as in the Odyssey or in a multitude of other oral traditions, including Australian aboriginal songlines, the Japanese epic Tale of the Heike, and the many stories in what Laurens van der Post has called the immense wealth of the unwritten literature of Africa.14 That’s a very convenient, an eminently portable, form of culture for a tribe on the move. And sound waves and light waves are such ephemeral things. They have the annoying property of leaving no archaeological trace. But absence of evidence isn’t evidence of absence.

And now, in a mere flash of evolutionary time, a mere few centuries, we’ve shown our versatility and adaptability in ways that seem to me more astonishing than ever. We no longer panic at the sight of a comet. Demons in the air have shrunk to a small minority of alien abductors. We don’t burn witches and heretics, at least not literally. The Pope apologizes for past misdeeds. Genocide was avoided in South Africa. We even dare, sometimes, to tolerate individual propensities and lifestyles if they don’t harm others. We argue that tyrants needn’t always win. And guess what, they don’t always win. Indeed, recent reversals notwithstanding, governments have become less tyrannical and more democratic, on average, over the past two centuries, in what political scientist Samuel Huntington has called three waves of democratization15 despite the setbacks in between, and now. And most astonishing of all, since 1945 we’ve even had the good sense so far — and very much against the odds — to avoid warfare with nuclear weapons.

We’ve marvelled at the sight of our beautiful Earth poised above the lunar horizon. We have space-based observing systems, super-accurate clocks, and super-accurate global positioning, adding to the cross-checks on Einstein’s gravitational theory, also called general relativity. And now there’s yet another, very beautiful cross-check on the theory — detection of the lightspeed gravitational ripples it predicts.16 We have the Internet, bringing us new degrees of freedom and profligacy of information and misinformation. It presents us with new challenges to exercise critical thinking and to build computational systems and artificial intelligences of unprecedented power, and to use them for civilized purposes, exploiting the robustness and reliability growing out of the open-source software movement, “the collective IQ of thousands of individuals”.17 We can read and write genetic codes, and thanks to our collective IQ are beginning, just beginning, to understand them.18 On large and small scales we’ve been carrying out extraordinary new social experiments with labels like ‘market democracy’, ‘market autocracy’, ‘children’s democracy’19, ‘microlending’ conducive to population control,20 ‘citizen science’, and the burgeoning social media. With the weaponization of the social media now upon us — and the threats to democracy, privacy, and safety from, for instance, automated face recognition, reverse-image search, and deep-fake software21 — there’s a huge downside as with any new technology. But there’s also a huge upside, and everything to play for...

* * TEXT OMITTED FROM THIS PREVIEW * *

What makes life as a scientist worth living? For me, part of the answer is the joy of being open and honest.

There’s a scientific ideal and a scientific ethic that power good science. And they depend crucially on openness and honesty. If you stand up in front of a large conference and say of your favourite theory “I was wrong”, you gain respect rather than losing it. I’ve seen it happen. Your reputation increases. Why? The scientific ideal says that respect for evidence, for theoretical coherence and self-consistency, for cross-checking, for finding mistakes, for dealing with uncertainty and for improving our collective knowledge is more important than personal ego, or financial gain. And if someone else has found evidence that refutes your theory, then the scientific ethic requires you to say so publicly. The ethic says that you must not only be factually honest but must also give due credit to others, by name, whenever their contributions are relevant.

The scientific ideal and ethic are powerful because even when, as is inevitable, they’re followed only imperfectly, they encourage not only a healthy scepticism but also a healthy mixture of competition and cooperation. Just as in the open-source software community, the ideal and ethic harness the collective IQ, the collective brainpower, of large research communities in ways that can transcend even the power of individual greed and financial gain. The ozone-hole story is a case in point.

So too is the human-genome story with its promise of future scientific breakthroughs, including medical breakthroughs, calling for years and decades of collective research effort. The scientific ideal and ethic were powerful enough to keep the genomic information in the public domain — available for use in open research communities — despite an attempt to lock it up commercially that very nearly succeeded.26 Our collective brainpower will be crucial to solving the problems posed by the genome and the molecular-biological systems of which it forms a part, including the interplay with current and future pandemic diseases. Like so many other problems now confronting us, they are problems of the most formidable complexity.

In the Postlude I’ll return to the struggle between open science and the forces ranged against it, with particular reference to climate change, the most complex problem of them all. Again, there’s no claim to originality here. I merely aim to pick out, from the morass of confusion and misinformation surrounding the topic,24 some basic points clarifying where the uncertainties lie, as well as the near-certainties.

This book reflects my own journey toward the frontiers of human self-understanding. Of course many others have made such journeys. But in my case the journey began in a slightly unusual way.

Music and the arts were always part of my life. Music was pure magic to me as a small child. But the conscious journey began with a puzzle. While reading my students’ doctoral thesis drafts, and working as a scientific journal editor, managing the peer review of colleagues’ research papers, I began to wonder why lucidity, or clarity — in writing and speaking, as well as in thinking — is often found difficult to achieve. And I wondered why some of my colleagues are such surprisingly bad communicators, even within their own research communities, let alone on issues of public concern. Then I began to wonder what lucidity is, in a functional or operational sense. And then I began to suspect a deep connection with the way music works. Music is, after all, not only part of our culture but also part of our unconscious human nature.

I now like to understand the word ‘lucidity’ in a more general sense than usual. It’s not only about what you can find in style manuals and in books on how to write, excellent and useful though many of them are. (Strunk and White27 is a little gem.) It’s also about deep connections not only with music but also with mathematics, pattern perception, biological evolution, and science in general. A common thread is what I call the organic-change principle.

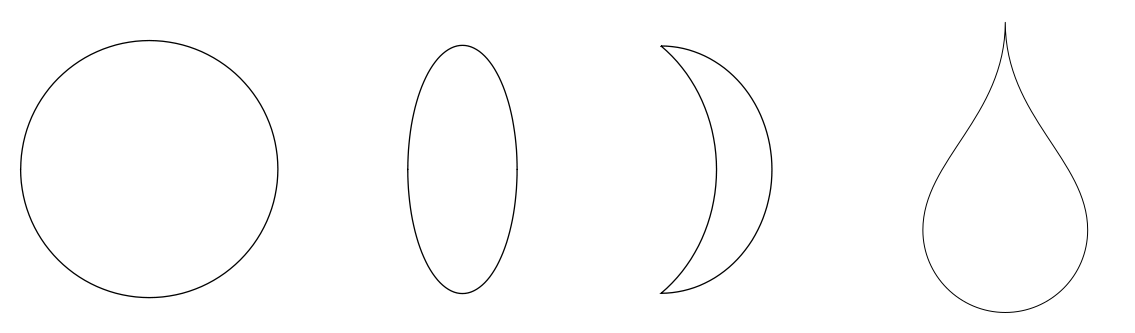

The principle says that we’re perceptually sensitive to, and have an unconscious interest in, patterns exhibiting ‘organic change’. These are patterns in which some things change, continuously or by small amounts, while others stay the same. So an organically-changing pattern has invariant elements.

The walking dots animation is an example. The invariant elements include the number of dots, always twelve dots. Musical harmony is another.

Musical harmony is an interesting case because ‘small amounts’ is relevant not in one, but in two different senses, as we’ll see in chapter 6. That leads to the idea of ‘musical hyperspace’. An organic chord progression, or harmony change, can take us somewhere that’s both nearby and far away. That’s how some of the magic is done, in many genres of Western music. An octave leap is a large change in one sense, but small in the other, indeed so small that musicians use the same name for the two pitches. The invariant elements in an organic harmony change can be pitches or chord shapes.
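One way to make the octave example concrete is the following minimal Python sketch. It assumes the standard MIDI note numbering and twelve-tone equal temperament with A4 at 440 Hz. Those are assumptions of this illustration, not taken from the text, and chapter 6 may frame the two senses differently. Counted in semitones or in frequency, the octave leap is a large step; reduced to note names, it is no step at all.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def note_name(midi_note: int) -> str:
    """Letter name of a MIDI note, ignoring which octave it lies in."""
    return NOTE_NAMES[midi_note % 12]

def frequency_hz(midi_note: int, a4_hz: float = 440.0) -> float:
    """Equal-tempered frequency, with MIDI note 69 taken as A4."""
    return a4_hz * 2.0 ** ((midi_note - 69) / 12)

# Middle C and the C one octave above it (MIDI notes 60 and 72).
middle_c, c_above = 60, 72

semitone_distance = c_above - middle_c          # large in this sense
frequency_ratio = frequency_hz(c_above) / frequency_hz(middle_c)
same_name = note_name(middle_c) == note_name(c_above)  # small in this sense

print(semitone_distance, round(frequency_ratio, 6), same_name)
```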

Music makes use of organically-changing sound patterns not just in its harmony, but also in its melodic shapes and counterpoint and in the overall form, or architecture, of an entire piece of music. That’s part of how it can grab our attention.

Mathematics, too, contains organically-changing patterns. In mathematics there are beautiful results about ‘invariants’ or ‘conserved quantities’, things that stay the same while other things change, often continuously through a vast space of possibilities. The great mathematician Emmy Noether discovered a common origin for many such results, through a profound and original piece of mathematical thinking. Her discovery is called Noether’s theorem and is recognized today as a foundation-stone of theoretical physics.

Our perceptual sensitivity to organic change exists for strong biological reasons. One reason is the survival value of seeing the difference between living things and dead or inanimate things. To see a cat stalking a bird, or a flower opening, is to see organic change.

So I’d dare to describe our sensitivity to it as deeply instinctive. Many years ago I saw a pet kitten suddenly die of some mysterious but acute disease — a sudden freezing into stillness. I’d never seen death before, but I remember feeling instantly sure of what had happened — ahead of conscious thought. And the ability to see the difference between living and dead has been shown to be well developed in human infants a few months old.

Notice how intimately involved, in all this, are ideas of a very abstract kind. The idea of some things changing while others stay invariant is itself highly abstract, as well as simple. It’s abstract in the sense that vast numbers of possibilities are included. There are vast numbers — combinatorially large numbers — of organically-changing patterns, musical, mathematical, visual, and verbal. Here again we’re glimpsing the fact already hinted at, that the unconscious brain can handle many possibilities at once. We have an unconscious power of abstraction. That’s almost the same as saying that we have unconscious mathematics. Mathematics is a precise means of handling many possibilities, many patterns, at once, in a self-consistent way, and of discovering surprising interconnections between them.

The walking dots animation shows that we have unconscious Euclidean geometry, the mathematics of angles and distances. There are combinatorially large numbers of arrangements of objects, at various angles and distances from one another. The roots of mathematics and logic lie far deeper, and are evolutionarily far more ancient, than they’re usually thought to be. They’re hundreds of millions of years more ancient than archaeology might suggest. In chapter 6 I’ll show that our unconscious mathematics includes, also, the mathematics underlying Noether’s theorem, and I’ll show how all this is related to Plato’s world of perfect mathematical forms.

So I’ve been interested in lucidity, ‘lucidity principles’, and related matters in a sense that cuts deeper than, and goes far beyond, the niceties and pedantries of style manuals. But before anyone starts thinking that it’s all about Plato and ivory-tower philosophy, let’s remind ourselves of some harsh practical realities — as Plato would have done had he lived today. What I’m talking about is relevant not only to music, mathematics, thinking, and communication skills but also, for instance, to the ergonomic design of machinery, of software and user-friendly IT systems (information technology), of user interfaces in general and of technological systems of any kind — including the emerging artificial-intelligence systems, where the stakes are so incalculably high.

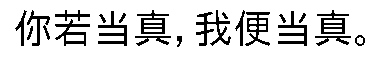

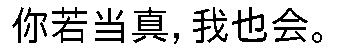

The organic-change principle — that we’re perceptually sensitive to organically-changing patterns — shows why good practice in any of these endeavours involves not only variation but also invariant elements, i.e., repeated elements, just as music does. Good control-panel or website design might use, for instance, repeated shapes for control knobs or buttons. And in writing and speaking one needn’t be afraid of repetition, if it forms the invariant element within an organically-changing word pattern. “If you are serious, then I’ll be serious” is a clearer and stronger sentence than “If you are serious, then I’ll be also.” Loss of the invariant element “serious” weakens the sentence. Still weaker are versions like “If you are serious, then I’ll be earnest.” Such pointless or gratuitous variation in place of repetition is what H. W. Fowler ironically called “elegant” variation, an “incurable vice” of second-rate writers.28 Its opposite can be called lucid repetition, as in “If you are serious, then I’ll be serious.” Lucid repetition is not the same as being repetitious. The pattern as a whole is changing, organically. It works the same way in every language I’ve looked at, including Chinese.29

Two more ‘lucidity principles’ are worth noting here. There’s an explicitness principle — the need to be more explicit than you feel necessary — because, obviously, you’re communicating with someone whose head isn’t full of what your own head is full of. As the great mathematician J. E. Littlewood once put it,30 “Two trivialities omitted can add up to an impasse.” Again, this applies to design in general, as well as to any form of writing or speaking that aims at lucidity. Quite often, all that’s needed is to use a noun, perhaps repeated, rather than a pronoun. With a website button marked ‘Cancel’ it helps to say what it cancels. And then there’s the more obvious coherent-ordering principle, the need to build context before new points are introduced. It applies not only to writing and speaking but also to the design of any sequential process on, for instance, a website or a ticket-vending machine.

One reason for attending to these principles is that human language is surprisingly weak on logic-checking, including checks for self-consistency.

That’s one of the reasons why language is such a conceptual minefield — something that’s long kept philosophers in business. And beyond everyday misunderstandings we have, of course, the workings of professional camouflage and deception, as in the ozone and other disinformation campaigns.

The logic-checking weakness shows up in the misnomers and self-contradictory terms encountered not only in everyday dealings but also — to my continual surprise — in the technical language used by my scientific and engineering colleagues...

* * TEXT OMITTED FROM THIS PREVIEW * *

What of my other question? What is this subtle and elusive thing we call understanding, or insight? What does it mean to think clearly about a problem?

Of course there are many answers, depending on one’s purpose and viewpoint. I’ll focus on scientific understanding.

What I’ve always found in my own research, and have always tried to suggest to my students, is that developing an in-depth scientific understanding of something — understanding in detail how it works — requires looking at it, and testing it, from as many different viewpoints as possible. That’s an important part of the creativity that goes into good science. And it puts a premium on good communication, including the ability to listen, actively, to someone offering a different viewpoint or focusing on a different aspect. Another part is to maintain a healthy scepticism, while respecting the evidence. And because it respects the evidence, such creativity is to be sharply distinguished from the postmodernist ‘anything goes’.

For instance, the multi-timescale fluid dynamics I’ve worked on professionally is far too complex to be understandable at all from a single viewpoint, such as the viewpoint provided by a particular set of mathematical equations. One needs a multi-modal approach with equations, words, pictures, and feelings all working together, as far as possible, to form a self-consistent whole with experiments and observations. And the equations themselves take different forms embodying different viewpoints, with technical names such as ‘variational’, ‘Eulerian’, ‘Lagrangian’, and so on. They’re mathematically equivalent but, as the great physicist Richard Feynman used to say, “psychologically very different”. I’ll give an example in chapter 6. Bringing in words, in a lucid way, is an important part of the whole but needs to be related to, and made consistent with, equations, pictures, and feelings.

Such multi-modal thinking and healthy scepticism have been the only ways I’ve known of escaping from the mindsets and unconscious assumptions that tend to entrap us, and of avoiding false dichotomies in particular. The history of science shows that escaping from unconscious mindsets has always been a key part of progress, as already remarked,5 including what Thomas Kuhn famously called paradigm shifts. And an important aid to cultivating a multi-modal view of any scientific problem is the habit of performing what Albert Einstein called thought-experiments, and mentally viewing those from as many angles as possible.

Einstein certainly talked about feeling things, in one’s imagination — forces, motion, colliding particles, light waves — and was always doing thought-experiments, mental what-if experiments if you prefer. The same thread runs through the testimonies of Richard Feynman and of other great scientists, such as Peter Medawar and Jacques Monod. It all goes back to juvenile play — that deadly serious rehearsal for real life — curious young animals and children pushing and pulling things (and people!) to see, and feel, how they work.

In my own research community I’ve often noticed colleagues having futile arguments about ‘the’ cause of some phenomenon. “It’s driven by such-and-such”, says one. “No, it’s driven by so-and-so”, says another. Sometimes the argument gets quite acrimonious. Often, though, they’re at cross-purposes because — perhaps unconsciously — they have different thought-experiments in mind.

Notice how the verb ‘to drive’ illustrates what I mean by language as a conceptual minefield. ‘Drive’ sounds incisive and clear-cut, but is nonetheless dangerously ambiguous. I sometimes think that our computers should make it flash red for danger, as soon as it’s typed, along with some other dangerously ambiguous words such as the pronoun ‘this’.

‘To drive’ can mean ‘to control’, as when driving a car, or controlling an audio amplifier via its input signal. But ‘to drive’ can also mean ‘to supply the energy needed’, via the fuel tank or the amplifier’s power supply. Well, there are two quite different thought-experiments here, on the amplifier let’s say. One is to change the input signal. The other is to switch the power off. A viewpoint focused on the power supply alone misses crucial aspects of the problem.

You may laugh, but there’s been a mindset in my research community that has, or used to have, precisely such a focus. It said that the way to understand our atmosphere and oceans is through their intricate ‘energy budgets’, disregarding questions of what they’re sensitive to. Yes, energy budgets are interesting and important, but no, they’re not the Answer to Everything. Energy budgets focus attention on the power supply, making an input signal look unimportant just because it’s small.
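The two amplifier thought-experiments can be made concrete with a toy model. What follows is a minimal sketch under stated assumptions, not a model of any real amplifier or of the climate system. The function name `amplifier_output`, the gain of 8 and the supply limit of 10 are all hypothetical, chosen only to keep the arithmetic easy to follow.

```python
def amplifier_output(input_signal, gain=8.0, supply_limit=10.0, power_on=True):
    """Toy amplifier (hypothetical values throughout).

    The output follows the input, scaled by `gain`, but can never exceed
    what the power supply allows (`supply_limit`). With the power off
    there is no output at all.
    """
    if not power_on:
        return 0.0
    raw = gain * input_signal
    # Clip to the range the power supply can deliver.
    return max(-supply_limit, min(supply_limit, raw))


# Baseline: a small input signal with the power on.
baseline = amplifier_output(0.25)

# Thought-experiment 1: change the input signal ('drive' meaning 'control').
changed_input = amplifier_output(0.5)

# Thought-experiment 2: switch the power off ('drive' meaning 'supply energy').
power_off = amplifier_output(0.25, power_on=False)

# The power supply sets a ceiling, but below that ceiling
# it is the small input that decides what comes out.
saturated = amplifier_output(2.0)

print(baseline, changed_input, power_off, saturated)
```

In this sketch, doubling the small input doubles the output, while switching off the power removes the output altogether. Both statements are true, and each answers a different question about what ‘drives’ the output.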

Instead of the verb ‘to drive’ it’s often helpful, I think, to use the verb ‘to mediate’, as in the biological literature where it usually points to an important part of some mechanism.

The topic of mindsets and unconscious assumptions has been illuminated not only through the work of Kahneman and Tversky6 but also through, for instance, that of Iain McGilchrist36 and Vilayanur Ramachandran.37 They bring in the workings of the brain’s left and right hemispheres. That’s a point to which I’ll return in chapter 4. In brief, the right hemisphere typically takes a holistic view of things and is more open to the unexpected, while the left hemisphere specializes in dissecting fine detail and is more prone to mindsets, including their unconscious aspects. The sort of scientific understanding I’m talking about — in-depth, multi-modal understanding — seems to involve an intricate collaboration between the two hemispheres, with each playing to its own very different strengths.

Conversely, if that collaboration is disrupted by brain damage, extreme forms of mindset can result. Clinical neurologists are familiar with a delusional mindset called anosognosia. Damage to the right hemisphere paralyses, for instance, a patient’s left arm, yet the patient vehemently denies that the arm is paralysed, and will make all sorts of excuses as to why he or she doesn’t fancy moving it when asked.

Back in the 1920s, the great physicist Max Born was immersed in the mind-blowing experience of developing quantum theory. Born later remarked that engagement with science and its healthy scepticism can give us an escape route from mindsets and unconscious assumptions. With the more dangerous kinds of zealotry or fundamentalism in mind, he said38

“I believe that ideas such as absolute certitude, absolute exactness, final truth, etc., are figments of the imagination which should not be admissible in any field of science... This loosening of thinking [Lockerung des Denkens] seems to me to be the greatest blessing which modern science has given to us. For the belief in a single truth and in being the possessor thereof is the root cause of all evil in the world.”

Further wisdom on these topics can be found in, for instance, the classic study of fundamentalist cults by Flo Conway and Jim Siegelman.39 It echoes religious wars over the centuries. Time will tell, perhaps, how the dangers from the fundamentalist religions compare with those from the fundamentalist atheisms. Among today’s fundamentalist atheisms we have not only scientific fundamentalism, saying that Science Is the Answer to Everything and Religion Must Be Destroyed — provoking a needless backlash against science, sometimes violent — but also, for instance, atheist versions of what economists now call market fundamentalism.2, 25

Market fundamentalism is arguably the most dangerous of all because of its financial and political power, still remarkably strong in today’s world. I don’t mean Adam Smith’s reasonable idea that market forces and profits are useful, in symbiosis with the division of labour and good regulation.25 Smith was clear about the need for regulation, written or unwritten.40 I don’t mean the business entrepreneurship that can provide us with useful goods and services. By market fundamentalism I mean the hypercredulous belief, the taking-for-granted, the simplistic and indeed incoherent mindset that market forces are by themselves the Answer to Everything, when based solely on ‘deregulation’ and the maximization of individual profit — regardless of evidence like the 2008 financial crash. Some adherents consider their beliefs ‘scientifically’ justified through the idea, which they wrongly attribute to Darwin, that competition between individuals is all that matters.1 That last idea isn’t, I should add, exclusive to the so-called political right.2

Understanding market fundamentalism is important because of its tendency to promote not only financial but also social instability, not least through gross economic inequality.2 And the financial power of market fundamentalism makes it one of the greatest threats to good science, and indeed to rational problem-solving of any kind because, for a true believer, individual profit is paramount, taking precedence over respect for evidence — evidence about financial and social stability, or mental health, or pandemic viruses, or biodiversity, or the ozone hole or climate or anything else. The point is underlined by the investigations in refs. 24 and 41.

Common to all forms of fundamentalism, or puritanism, or extremism is that besides ignoring or cherry-picking evidence they forbid the loosening of thinking that allows freedom to view things from more than one angle. Only one viewpoint is permitted, for otherwise you are ‘impure’. You’re commanded to have tunnel vision. The 2008 financial crash seems to have made only a small dent in market fundamentalism, so far, though perhaps reducing the numbers of its adherents. Perhaps the COVID-19 pandemic will make a bigger dent. It’s too early to say. And what’s called ‘science versus religion’ is not, it seems to me, about scientific insight versus religious, or spiritual, insight. Rather, it’s about scientific fundamentalism versus religious fundamentalism, which of course are irreconcilable.

Such futile dichotomizations cry out for more loosening of thinking. How can such loosening work? As Ramachandran or McGilchrist might say, it’s almost as if the right brain hemisphere nudges the left with a wordless message to the effect that ‘You might be sure, but I smell a rat: could you, just possibly, be missing something?’

It’s well known that in 1983 a Russian officer, Stanislav Petrov, saved us from likely nuclear war. At great personal cost, he disobeyed standing orders when a malfunctioning weapons system said ‘nuclear attack imminent’. He smelt a rat and we had a narrow escape. We probably owe it to Petrov’s right hemisphere. There have been other such escapes.

Let’s fast-rewind to a few million years ago, and further consider our ancestors’ evolution. Where did we, our insights, and our mindsets come from? And how on Earth did we acquire our language ability — that vast conceptual minefield — so powerful, so versatile, yet so weak on logic-checking? These questions are more than just tantalizing. Clearly they’re germane to past and present conflicts, and to future risks including existential risks.

The first obstacle to understanding is what I’ll dare — following a suggestion by John Sulston — to call simplistic evolutionary theory. The theory is still firmly entrenched in popular culture, with labels like ‘Darwinian struggle’. Many biologists would now agree with John that the theory is no more than a caricature. But it’s a remarkably persistent caricature. It’s still hugely influential. It includes the idea that competition between individuals is all that matters.

More precisely, simplistic evolutionary theory says that evolution has just three aspects. First, the structure of an organism is governed entirely by its genome, acting as a deterministic ‘blueprint’ made of all-powerful ‘selfish genes’. Second, contrary to what Charles Darwin thought,18 natural selection is the only significant evolutionary force. And third, natural selection works through ‘survival of the fittest’, conceived of solely in terms of a struggle between individuals.

Survival of the fittest would be a reasonable proposition were it not that an oversimplified notion of fitness is used. Not only is fitness presumed to apply solely to individual organisms, but it’s also presumed to mean nothing more than the individual’s ability to pass on its genes. Admittedly this purely competitive, purely individualistic view does explain much of what happens in our planet’s astonishing biosphere. But it also misses many crucial points. It’s not the evolutionary Answer to Everything.

There’s a slightly more sophisticated view called ‘inclusive fitness’ or ‘kin selection’, which replaces individuals by families whose members share enough genes to count as closely related. But it misses the same points.

For one thing, as Darwin recognized, our species and many other social species, such as baboons, could not have survived without cooperation within large groups. Without such cooperation, alongside competition, our ground-dwelling ancestors would have been easy meals for the large, swift predators all around them, including the big cats — gobbled up in no time at all! Cooperation restricted to a few closely related individuals would not have been enough to survive those dangers. And Darwin gives clear examples in which cooperation within large non-human groups is, in fact, observed to take place, as for instance with the geladas and the hamadryas baboons of Ethiopia.1

Even bacteria cooperate. That’s well known. One way they do it is by sharing small packages of genetic information called plasmids or DNA cassettes. A plasmid might for instance contain information on how to survive antibiotic attack. Don’t get me wrong. I’m not saying that bacteria ‘think’ like us, or like baboons or dolphins or other social mammals, or like social insects. And I’m not saying that bacteria never compete. They often do. But for instance it’s a hard fact — a practical certainty, and now an urgent problem in medicine — that large groups of individual bacteria cooperate among themselves to develop resistance to antibiotics. For the bacteria, such resistance is highly adaptive, and strongly selected for. Yes, selective pressures are at work, but at group level as well as at individual and kin level, and at cellular and molecular level,18 in heterogeneous populations living in heterogeneous, and ever-changing, ecological environments.

So it’s plain that natural selection operates at many levels within the biosphere, and that cooperation is widespread alongside competition. Indeed the word ‘symbiosis’ in its standard meaning denotes a variety of intimate, and well studied, forms of cooperation not between individuals of one species but between those of entirely different species. And different species of bacteria share plasmids.42 The trouble is the sheer complexity of it all — again a matter of combinatorial largeness as well as of population heterogeneity, and of the complexities of mutual fitness in and around various ecological niches. We’re far from having comprehensive mathematical models of how it all works.

On the other hand, though, the models have made great progress in recent decades, aided by increasing computer power. There have been significant advances at molecular level.18 They’ve added to the accumulated evidence for what’s now called multi-level natural selection or, for brevity, multi-level selection.43–55 The evidence comes not only from better models at various levels but also, for instance, from laboratory experiments with heterogeneous populations of real organisms, directly demonstrating group-level selection.43

The persistence of simplistic evolutionary theory, oblivious to all these considerations, seems to be bound up with a particular pair of mindsets. The first says that the genes’ eye view — or, more fundamentally, the replicators’ or DNA’s eye view — gives us the only useful angle from which to view evolution. The second reiterates that selective pressures operate at one level only, that of individual organisms. The first mindset misses the value of viewing a problem from more than one angle. The second misses most of the real complexity. And that complexity includes not only group-level selective pressures as demonstrated in the laboratory,43 but also the group-level selective pressures on our ancestors noted by biologists such as Jacques Monod3 and by palaeoanthropologists such as Phillip Tobias,4 Robin Dunbar,46 and Matt Rossano48 to name but a few.

Both mindsets seem to have come from mathematical models that are grossly oversimplified by today’s standards, valuable though they were in their time. They are the old population-genetics models that were first formulated in the early twentieth century44 and then further developed in the 1960s and 1970s. For the sake of mathematical simplicity and solvability those models exclude, by assumption, all the aforementioned complexities as well as multi-timescale processes and, in particular, realistic group-level selection scenarios.43, 52, 54 And the hypothetical ‘genes’ in those models are themselves grossly oversimplified. They correspond to the popular idea of a gene ‘for’ this or that trait — nothing like actual genes, the protein-coding sequences within the genomic DNA. Very many actual genes are involved, usually, in the development of a recognizable trait, along with non-coding parts of the DNA, the associated regulatory networks, and the environmental circumstances.3, 9, 18

* * TEXT OMITTED FROM THIS PREVIEW * *

And what of the changing climate that our ancestors had to cope with? Over the timespan of figure 2, the climate system underwent increasingly large fluctuations some of which were very sudden, as will be illustrated shortly, drastically affecting our ancestors’ food supplies and living conditions. In the later stages, which culminated in the runaway brain evolution and global-scale migration of our species, the increasing climate fluctuations would have been ramping up the pressure to develop tribal solidarity and versatility mediated by ever more elaborate mythologies, rituals, songs, and stories passed from generation to generation.

And what stories they must have been! Great sagas etched into a tribe’s collective memory. It can hardly be accidental that the sagas known today tell of years of famine and years of plenty, of battles, of epic journeys, of great floods, and of terrifying deities that are both fickle benefactors and devouring monsters — just as the surrounding large predators must have appeared to our early ancestors, as those ancestors scavenged on leftover carcasses long before becoming top predators themselves.67 And epic journeys and great floods must have been increasingly part of our ancestors’ struggle to survive, as they migrated under the increasingly changeable climatic conditions...

* * TEXT OMITTED FROM THIS PREVIEW * *

To survive all this, our ancestors must have had not just tribal solidarity but also, at least in times of crisis, strong leaders and willing followers. Hypercredulity and weak logic-checking must have had a role — selected for as genome and culture co-evolved and as language became more sophisticated, and more fluent and imaginative with stories of the natural and the supernatural.

How do you make leadership work? Do you make a reasoned case? Do you ask your followers to check your logic? Do you check it yourself? Of course not! You’re a leader because, with your people starving, you’ve emerged as a charismatic visionary. You’re divinely inspired. Your people love you. You know you’re right, and it doesn’t need checking. You have the Answer to Everything. “O my people, I’ve been shown the True Path that we must follow. Come with me! Let’s make our tribe great again! Beyond those mountains, over that horizon, that’s where we’ll find our Promised Land. It is our destiny to find that Land and overcome all enemies because we, and only we, are the True Believers. Our stories are the only true stories.” How else, in the incessantly-fluctuating climate, I ask again, did our one species — our single human genome — spread all around the globe in less than a hundred millennia?

And what of dichotomization — that ever-present, ever-potent source of unconscious assumptions — assumptions that are so often wrong in today’s world? Well, it’s even more ancient, isn’t it. Hundreds of millions of years more ancient. Ever since the Cambrian, half a billion years ago, survival has teetered on the brink of edible or inedible, male or female, friend or foe, and fight or flight. In life-threatening emergencies, binary snap decisions were crucial. But with language and hypercredulity in place, the dichotomization instinct — rooted in the most ancient, the most primitive, the most reptilian parts of our brains — could grow into new forms. Not just friend or foe but also We are right and they are wrong. It’s the Absolute Truth of our belief system versus the absolute falsehood of theirs, with nothing in between.

You might be tempted to dismiss the last point as a mere ‘just so story’, speculation unsupported by evidence. But I’d argue that there’s plenty of evidence today. One clear line of evidence is the ease with which polarized conflicts are amplified, expanded, and intensified by the social media, despite the immense harm that they cause.

There’s also the evidence documented in the book by evolutionary biologist David Sloan Wilson.43 This carefully argued book includes case studies of fundamentalist or puritanical belief systems — religious in chapter 6, and atheist in chapter 7. For instance Ayn Rand, an atheist prophet revered for her preaching of market fundamentalism, held that selfishness is an absolute good and altruism an absolute evil. Personal greed at others’ expense is the Answer to Everything, and the pinnacle of moral virtue. Wilson describes how a well-intentioned believer, Rand’s disciple Alan Greenspan, was “dumbfounded” by the 2008 financial crash shortly after his long reign at the US Federal Reserve Bank. How could a system based on Rand’s gospel fail so abysmally? By a supreme irony, Rand’s gospel also says not only that ‘We are right and they are wrong’ but also that ‘We are rational and they are irrational’. Any logic-checking that considers an alternative viewpoint is ‘irrational’, something to be dismissed out of hand.

It’s the same for any other puritanical mindset. Only one viewpoint is permitted; and unless you dismiss the alternatives immediately, without stopping to think, you’re impure, aren’t you. You’re a ditherer, a lily-livered moral weakling. You’re in danger of sending a heretical ‘mixed message’ when it’s all about Us Versus Them, Truth Versus Falsehood, Good Versus Evil. That’s the force behind the so-called ‘purity spirals’ seen on social media, in which reasoned debates turn into shouting matches between increasingly polarized factions.

* * *

Yes, dichotomization makes us stupid. Luckily, however, dichotomization isn’t all there is. We don’t have to see the world this way. It’s a trap we needn’t fall into. Outside life-threatening emergencies, and far more than your average reptile, we do have the ability to stop and think. We do have the ability to see that while some issues are dichotomous many others are not. We do have the ability to see — oh shock horror — that there might be merit in different viewpoints, helping us to solve real problems. And our hopes of a civilized future will depend, it seems to me, upon strengthening those thinking abilities. In the first edition of this book I ventured to hope that, surprising though it may seem, the social-media giants might, in their own self-interest, help with such strengthening.

For many years now the social-media giants, beginning with Facebook, have amassed vast wealth and supranational power by using the artificial intelligences, the robots, that they’ve built and trained within their secret ‘large hadron collider of experimental psychology’ as I called it — experimentation on billions of human subjects.23 But even today those robots are not yet very intelligent! In some ways they’re downright stupid. Why do I say that? Because, as currently set up, they pose an existential threat to their own survival along with that of the social media themselves, in their present-day entrepreneurial form.

Part of the threat comes from the way the robots have been trained to exploit the dichotomization instinct, alongside other primitive instincts such as fear, anger, and hatred, to make things go viral — reaching vast audiences and raking in huge profits from advertising revenue. Like or dislike, friend or unfriend, follow or don’t follow, share or don’t share, include or exclude, and so on, are not only addictive, attention-grabbing data-gathering devices but also, in their own way, examples of what I called ‘perilous binary buttons’. Press or don’t press, and don’t stop to think. In this and in other ways of shutting off thinking the social-media robots have become, among other things, powerful disinformation-for-profit and hatred-for-profit machines. On a massive and unprecedented scale they’ve amplified fear, anger, hatred, and mental illness, and spread ‘post-truth’ confusion in the form of many alternative ‘realities’.35 Stories that don’t let facts get in the way are much easier to compose than accurate, fact-based stories and — when they’re dramatic, and emotionally charged — are much better at grabbing attention. The more it’s emotionally charged, the faster it spreads, making it more profitable — and more politically exploitable.

All this has created a new threat to democratic social stability that adds to the older, ongoing threat from gross economic inequality.2 Among their billions of users the robots, functioning at lightning speed, far outstripping the snail’s pace of human moderators, have amplified and expanded the purity spirals, the divisive rhetoric and hatespeech, the misinformed echo chambers, the filter bubbles and preference bubbles, the confusion, and the sheer anger, that’s been turbocharging the vicious binary politics and culture wars we see all around us. Ref. 72 describes recent statistical studies of these phenomena.

It’s almost as if the social-media giants have been bent on self-destruction. The turbocharged binary politics looks set to replace democracy by brutal autocracy even faster than in the 1920s and 1930s — and this time even at home, even at the nerve centre of the entrepreneurial social media, even in freedom-loving Silicon Valley. Autocracy would destroy the private autonomy of enterprises like Facebook, Meta, and Google. It would destroy the freewheeling, freedom-loving, democratic business environment to which they owe their vast wealth and power. Please don’t get me wrong. The social media have their upside and have brought great benefits of many kinds, such as useful networking and the videos showing kids how to make and repair things — to say nothing of new ways to resist autocracy and to aid high-quality investigative journalism. The threat to democratic social stability was no doubt unintended. But that doesn’t make the threat less real, and less imminent.

The social-media technocrats must surely have recognized their peril, whatever their financial bosses might think. The technocrats aren’t themselves stupid. There’s a good chance, I think, that they’re trying to make their robots smarter and less socially destabilizing, difficult though the task may be, difficult though it may be to overcome the short-term profit incentive for destabilization, and difficult though it may be — on the technical side — to develop robot-assisted moderation at lightning speed. Social scientists are coming up with further ideas that might help,72 as might new experiments with open-source social-media structures like Mastodon, which aim to steer clear of the profit incentive. And the storming of the US Capitol on 6 January 2021 and its threat to turn the USA into an autocracy must, surely, have focused minds, along with the arrival of the new deep-fake disinformation and character-assassination techniques.21

Smarter robots could be a game-changer in helping us humans to get smarter too. They could help us to escape from mindsets instead of being trapped by them. With humans and robots there’s a dystopian mindset that ‘they’ll take over’. But that’s yet another binary trap, another Us Versus Them, based on the ‘singularity fallacy’ — the unconscious assumption that there’s just one kind of intelligence and just one measure of intelligence, putting robots into direct competition with humans. When, instead, humans and robots work together on solving problems, with each playing to their own very different strengths, the combination can become much more powerful and exciting than either alone.

A robot can, so to speak, take on the role of a third ‘brain hemisphere’ to help with problem-solving. Included might even be the problem of maintaining democratic social stability, in all its daunting complexity. Instead of shutting off our thinking, robots could help to open it up. They could, if incentivized in new ways, help us to see things from more than one angle.

An early example was the famous work of the DeepMind team led by Demis Hassabis, with a robot called AlphaGo. In learning to play the combinatorially large game of Go, it discovered winning game patterns that no human had thought of. And now we have its descendant AlphaFold, which in 2020 made a breakthrough toward solving the combinatorially large, and scientifically important, problem of protein folding55 — the problem of deducing the three-dimensional shapes of protein molecules solely from a knowledge of their DNA, hence amino-acid, sequences in cases where the sequence fixes the shape. One of the robots’ special strengths is complex pattern recognition within vast sets of possibilities.

The most powerful robots are in some ways, within their limitations, a bit like precocious children. They work by being open to learning. As with human children, we need to get to know them and to get better at teaching them and, above all, better at choosing what to teach them and how best to incentivize them. Should we keep on pushing them to amplify the patterns of social instability, just because it’s lucrative in the short term? Is that a smart thing to do? Or should we push them instead to encourage flexible, versatile lateral thinking, and critical thinking, helping to expose things that might surprise us and even make us a bit uncomfortable? Could they help us to become more skilful and adaptable in future? AlphaGo and AlphaFold suggest that the answer is, in principle at least, a resounding yes.

We can engage with our own children without knowing the wiring diagrams and patterns of plasticity within their brains. Similarly, we can engage with our robots without knowing what their millions of lines of self-generated computer code look like. And we can get them to help reinforce the more civilized human instincts rather than, as at present, mostly the nastier ones to boost profits. They could for instance do a better job on learning the ever-evolving patterns and contexts of hatespeech and catching it, at lightning speed, before it spreads...

* * TEXT OMITTED FROM THIS PREVIEW * *

Picture a typical domestic scene. “You interrupted me!” “No, you interrupted me!”

Such stalemates can arise from the fact that perceived times differ from actual physical times in the outside world, as measured by clocks.74 I once tested this experimentally by secretly tape-recording a dinner-table conversation. At one point I was quite sure that my wife had interrupted me, and she was equally sure it had been the other way round. When I listened afterwards to the tape, I discovered to my chagrin that she’d been right. She had started to speak a small fraction of a second before I did.

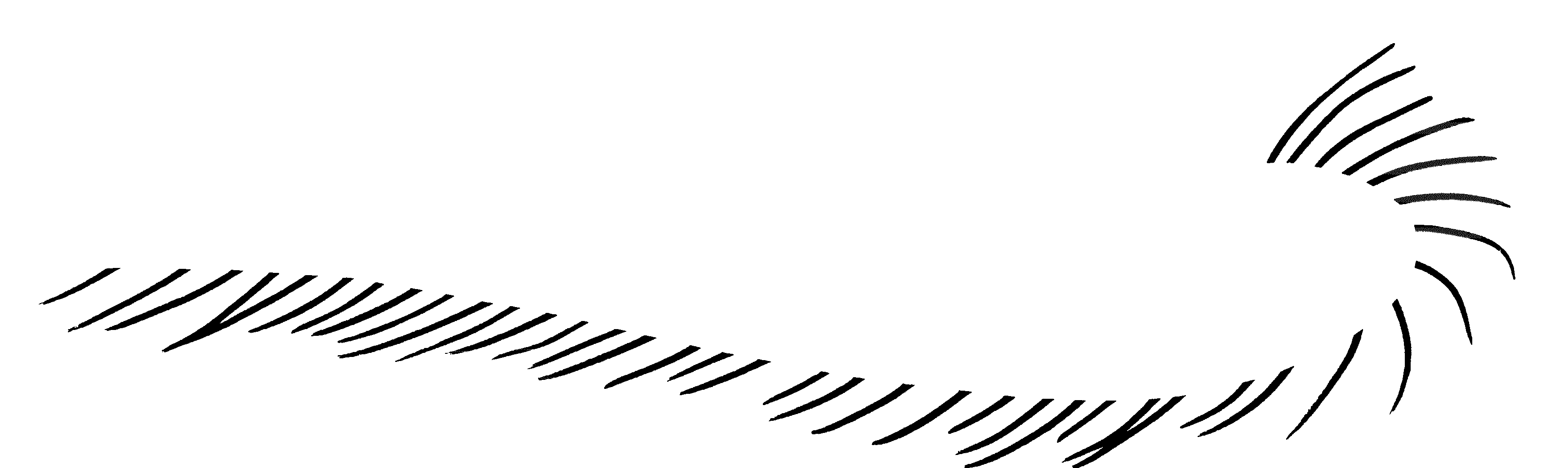

Musical training includes learning to cope with the differences between perceived times and actual times. For instance musicians often check themselves with a metronome, a small machine that emits precisely regular clicks. The final performance won’t necessarily be metronomic, but practising with a metronome helps to remove inadvertent errors in the fine control of rhythm. “It don’t mean a thing if it ain’t got that swing...”

There are many other examples. I once heard a radio interviewee recalling how he’d suddenly got into a gunfight: “It all went intuh slowww — motion.” Another example is that of the jazz saxophonist Tony Kofi. At age 16 he fell three storeys from a roof-repair job. He describes how he experienced the fall in slow motion, and on the way down had visions of unknown faces and places and saw himself playing an instrument. It was a life-changing experience that made him into a musician.

A scientist who claims to know that eternal life is impossible has failed to notice that perceived timespans at death might stretch to infinity. That, by the way, is a simple example of the limitations of science. What might or might not happen to perceived time at death is a question outside the scope of science, because it’s outside the scope of experiment and observation. It’s here that ancient religious teachings show more wisdom, I think, when they say that deathbed compassion and reconciliation are important to us. Perhaps I should add that, as hinted earlier, I’m not myself conventionally religious. I’m an agnostic whose closest approach to the numinous — to the transcendent, to the divine if you will — has been through music.

Some properties of perceived time are very counterintuitive indeed. They’ve caused much conceptual and philosophical confusion. Besides the ‘slow motion’ experiences there are also, for instance, experiences in which the perceived time of an event precedes the arrival of the sensory data defining the event, sometimes by as much as several hundred milliseconds. At first sight this seems crazy, and in conflict with the laws of physics. Those laws include the principle that cause precedes effect. But the causality principle in physics refers to actual times in the outside world, not to perceived times. The apparent conflict is a perceptual illusion. I’ll call it an ‘acausality illusion’.75

The existence of acausality illusions — of which music provides outstandingly clear examples, as we’ll see shortly — can be understood from the way perception works. And the way perception works is well illustrated by the walking dots animation (figure 1).

Consider for a moment what the animation tells us. The sensory data are twelve moving dots in a two-dimensional plane. But they’re seen by anyone with normal vision as a person walking — as a particular three-dimensional motion exhibiting organic change. The invariant elements include the number of dots. Also invariant are the distances, in three-dimensional space, between pairs of locations corresponding to particular pairs of dots. There’s no way to make sense of this except to say that the unconscious brain fits to the data an organically-changing internal model that represents the three-dimensional motion, using an unconscious knowledge of three-dimensional Euclidean geometry.

That by the way is what Kahneman6 calls a ‘fast’ cognitive process, something that happens ahead of conscious thought, and outside our volition. Despite knowing that it’s only twelve moving dots, we have no choice but to see a person walking.
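The point about invariant distances can be checked numerically. The following is a minimal Python sketch, not taken from the animation itself: the three dot positions, the 40-degree turn, and the function names are all illustrative assumptions. It treats three dots as fixed to one rigid body segment, turns the segment in three dimensions, and compares the pairwise distances in space with the pairwise distances in the flat, projected image.

```python
import math
from itertools import combinations


def rotate_y(point, angle):
    """Rotate a 3D point about the vertical (y) axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def project(point):
    """Flatten a 3D point onto the 2D screen by discarding depth (z)."""
    x, y, _ = point
    return (x, y)


def pairwise_distances(points):
    """Distances between every pair of points, in a fixed order."""
    return [math.dist(p, q) for p, q in combinations(points, 2)]


def max_change(before, after):
    """Largest absolute change between two matching lists of distances."""
    return max(abs(a - b) for a, b in zip(before, after))


# Hypothetical positions of three dots attached to one rigid segment.
segment = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.5)]

# The same segment after turning 40 degrees, as a walker's limb might.
turned = [rotate_y(p, math.radians(40)) for p in segment]

# Distances measured in three-dimensional space.
change_3d = max_change(pairwise_distances(segment),
                       pairwise_distances(turned))

# Distances measured on the two-dimensional screen.
change_2d = max_change(pairwise_distances([project(p) for p in segment]),
                       pairwise_distances([project(p) for p in turned]))

print(f"largest change in 3D distances: {change_3d:.2e}")
print(f"largest change in 2D distances: {change_2d:.3f}")
```

Under these assumptions the three-dimensional distances stay the same to within rounding error, while the on-screen distances shift appreciably. Only a three-dimensional description leaves something constant, which is consistent with the idea that the brain's fitted model is three-dimensional.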

Such model-fitting has long been recognized by psychologists as an active process involving unconscious prior probabilities, and top-down as well as bottom-up flows of information.37, 76, 77 The ‘top-down’ flow comes from the brain’s repertoire of internal models, with their unconscious prior probabilities. ‘Bottom-up’ refers to the incoming data. For the walking dots the greatest prior probabilities are assigned to a particular class of three-dimensional motions, privileging them over other ways of creating the same two-dimensional dot motion. The active, top-down, model-fitting aspects also show up in neurophysiological studies.78
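One common way to make ‘unconscious prior probabilities’ concrete is Bayes' rule, in which each candidate model's prior probability is weighted by how well that model explains the incoming data. The sketch below is an illustration under that assumption, not a description of what the brain actually computes; the two hypothesis names and all the numbers are hypothetical. Both interpretations reproduce the dot motion equally well, so the prior alone decides which one wins.

```python
def posterior(priors, likelihoods):
    """Combine top-down priors with bottom-up likelihoods via Bayes' rule."""
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())
    return {h: weight / total for h, weight in unnormalized.items()}


# Top-down: hypothetical prior probabilities from the repertoire of models.
priors = {"3d_walker": 0.9, "flat_dots": 0.1}

# Bottom-up: both models fit the same 2D dot motion equally well (assumed).
likelihoods = {"3d_walker": 1.0, "flat_dots": 1.0}

result = posterior(priors, likelihoods)
best = max(result, key=result.get)

print(result)
print("perceived interpretation:", best)
```

With equal likelihoods the posterior simply reproduces the prior, so the privileged three-dimensional interpretation is the one selected. Changing the likelihoods, for example by freezing the animation so that the data no longer favour any motion, would shift the balance.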

The term pattern-seeking is sometimes used to suggest the active nature of the unconscious model-fitting process. So active is our unconscious pattern-seeking that we’re prone to what psychologists call pareidolia, seeing patterns in random images. (People see the devil’s face in a thundercloud, then form a conspiracy theory that the government covered it up.) For the walking dots the significant pattern is four-dimensional, involving as it does the time dimension as well as all three space dimensions. Without the animation, one tends to see no more than a bunch of dots.

And what is a ‘model’? In the sense I’m using the word, it’s a partial and approximate representation of reality, or presumed reality. “All models are wrong, but some are useful.” And the most useful models are not only representations, but also ‘prediction engines’ giving a sense of what’s likely to happen next, such as whether or not the person walking will take a further step.

Models are made in a variety of ways. They’re usually made with symbols of one sort or another. The internal model evoked by the walking dots is made by activating some neural circuitry. Patterns of neural activity are symbols. The objects appearing in video games and virtual-reality simulations are models made of electronic circuits and computer code. Computer code is made of symbols. Children’s model boats and houses are made of real materials but are, indeed, models as well as real objects — partial and approximate representations of real boats and houses. Population-genetics models are made of mathematical equations and, usually, computer code. So too are models of photons, of air molecules, of black holes, of lightspeed gravitational ripples, and of jet streams and the ozone hole. Any of these models can be more or less accurate, more or less detailed, and more or less predictive. But they’re all partial and approximate. And nearly all of them are made of symbols.

So ordinary perception, in particular, works by model-fitting. Paradoxical and counterintuitive though it may seem, the thing we perceive is — and can only be — the unconsciously-fitted internal model. And the model has to be partial and approximate because our neural processing power is finite. The whole thing is counterintuitive because it goes against our subjective visual experience of outside-world reality — as not just self-evidently external, but also as direct, clear-cut, unambiguous, and seemingly exact in many cases.

Indeed, that experience is sometimes called ‘veridical’ perception, as if it were perfectly accurate. One often has an impression of sharply-outlined exactness — with such things as the delicate shape of a bee’s wing or a flower petal, the precise geometrical curve of a hanging dewdrop, the sharp edge of the full moon or of the sea on a clear day, and the magnificence, the sharply-defined jaggedness, of snowy mountain peaks against a clear blue sky.79

Right now I’m using the word ‘reality’ to mean the outside world. Also, I’m assuming that the outside world exists. I’m making that assumption consciously as well as, of course, unconsciously. Notice by the way that ‘reality’ is itself another dangerously ambiguous word. It’s another source of conceptual and philosophical confusion...

* * TEXT OMITTED FROM THIS PREVIEW * *

The use of echolocation by bats, whales and dolphins is a variation on the same theme. For bats, too, the perceived reality must be the internal model — not the echoes themselves, but a symbolic representation of the bat in its surroundings. It must work in much the same way as our vision except that the bat provides its own illumination. To start answering the famous question ‘what is it like to be a bat’ we could do worse than imagine seeing in the dark with a flashing floodlight, whose rate of flashing can be increased at will.

And what is it like to be an octopus? Like any other animal, an octopus needs to be well oriented in its surroundings. So regardless of brain anatomy it needs a single self-model, and an accompanying perception of ‘self’ — not the absurdity of eight or nine separate ‘selves’ as has sometimes been suggested. The anatomy of an octopus, whose brain is spread out across its eight arms, is irrelevant to the question. An entirely different question is where to focus your attention. We humans can focus on what our left fingers and right toes are doing. So I daresay an octopus can focus on what its fifth and seventh arms are doing.

And what of the brain’s two hemispheres? Here I must defer to McGilchrist36 and Ramachandran,37 who in their different ways offer a rich depth of understanding coming from neuroscience and neuropsychiatry, far transcending the superficialities of popular culture. For present purposes, McGilchrist’s key point is that having two hemispheres is evolutionarily ancient. Even fish have them. The two hemispheres may have begun with bilateral symmetry in primitive vertebrates but then evolved in different directions. If so, it would be a good example of how neutral genomic changes can later become adaptive.18

A good reason to expect such bilateral differentiation, McGilchrist argues, is that survival is helped by having two different styles of perception. They might be called holistic and fragmented. The evidence shows that the first, holistic style is a specialty of the right hemisphere, and the second a specialty of the left, or vice versa in a minority of people.